What is GPU Acceleration?

GPU acceleration refers to the use of a graphics processing unit (GPU) along with a CPU to accelerate computing tasks and applications.

GPUs are specialized electronic circuits that are highly effective at processing graphics and other parallel workloads. Offloading compute-intensive tasks to the GPU can substantially improve overall system performance.

For example, a GPU can be used to accelerate tasks such as video encoding, machine learning, and scientific computing.

GPUs also accelerate consumer applications such as video games and image editing software.

Why is GPU Acceleration Important?

GPU acceleration has become extremely important in today’s computing landscape for several reasons:

- Performance: GPUs can process certain workloads much faster than CPUs alone. For highly parallel tasks like rendering graphics, training neural networks, or running simulations, a GPU can provide a 10-100x speedup over the CPU alone in favorable cases. This performance benefit is critical in fields like gaming, AI, and scientific computing.

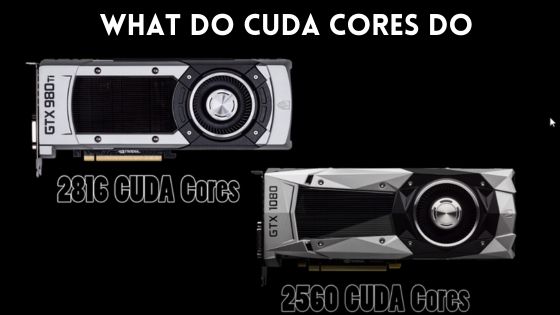

- Scalability: Scaling up performance by adding more CPUs is limited by hardware constraints and complexity. GPUs scale more readily for parallel work, with thousands of cores applying the same operation to different data elements at once.

- Energy efficiency: For parallel workloads, GPUs provide higher performance per watt than CPUs. This reduces the energy needed per task, making GPU acceleration well suited to data centers, supercomputers, and mobile or embedded devices.

- Compatibility: Major frameworks like TensorFlow and PyTorch, and platforms like NVIDIA CUDA, make it easy to leverage GPU acceleration. The hardware and software ecosystem around GPU computing is mature.

GPU acceleration has become a crucial enabling technology across industries. Any application with parallelizable work that needs additional computing horsepower can benefit from incorporating GPU acceleration.

Benefits of GPU Acceleration

There are several key benefits that GPU acceleration provides:

Increased Performance

The parallel architecture of GPUs allows them to perform certain computations much faster than even the most advanced CPUs.

Certain workloads like graphics rendering, deep learning, cryptography, and simulations see large performance gains from GPU acceleration, often a 10-100x speedup over CPUs.

For example, deep learning models can train significantly faster by leveraging hundreds or thousands of GPU cores in parallel.

This enables quicker model iteration and deployment in applications like computer vision, natural language processing, and more.
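A 10-100x figure applies to the portion of a workload that actually runs on the GPU; the end-to-end gain also depends on how much of the program stays on the CPU. The following is a minimal Python sketch of that relationship using Amdahl's law. The function name `overall_speedup` and the sample fractions are illustrative assumptions, not measurements.

```python
def overall_speedup(parallel_fraction: float, kernel_speedup: float) -> float:
    """Amdahl's law: end-to-end speedup when only part of the runtime is accelerated."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / kernel_speedup)


# Hypothetical workloads: the fraction of runtime that can move to the GPU,
# each paired with an assumed 100x speedup on the offloaded part.
for fraction in (0.50, 0.90, 0.99):
    speedup = overall_speedup(fraction, 100.0)
    print(f"{fraction:.0%} offloaded at 100x -> {speedup:.1f}x overall")
```

With these assumed numbers, a program that spends half its time in serial code gains less than 2x overall even from a 100x faster kernel, which is why the parallel fraction matters as much as raw GPU speed.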

Reduced Power Consumption

GPUs provide higher throughput per watt compared to CPUs.

Offloading intensive computations to the GPU frees up the CPU and shortens the time the system spends under heavy load. For suitable workloads, this results in lower total energy use for the same amount of computation.

The energy efficiency of GPU acceleration is especially valuable in applications like AI/ML data centers and supercomputers, where electricity costs can be prohibitively high.

It also enables deployment of high performance computing in embedded and mobile form factors.
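The efficiency argument rests on energy being power multiplied by time: a system that draws more power can still use less energy if it finishes sooner. The sketch below works through that arithmetic in Python. All wattages and runtimes are hypothetical placeholders, not benchmark results.

```python
def energy_kwh(power_watts: float, runtime_seconds: float) -> float:
    """Energy used by a job: power (W) x time (s), converted from joules to kWh."""
    joules = power_watts * runtime_seconds
    return joules / 3.6e6  # 1 kWh = 3.6 million joules


# Hypothetical job: the GPU-assisted system draws more power but finishes 20x sooner.
cpu_only = energy_kwh(power_watts=200.0, runtime_seconds=10 * 3600)  # 10 hours
gpu_assisted = energy_kwh(power_watts=500.0, runtime_seconds=1800)   # 30 minutes

print(f"CPU only:     {cpu_only:.2f} kWh")
print(f"GPU assisted: {gpu_assisted:.2f} kWh")
print(f"Energy ratio: {cpu_only / gpu_assisted:.1f}x")
```

Under these assumed figures the GPU-assisted run uses 0.25 kWh against 2 kWh for the CPU-only run, despite the higher power draw while it is running.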

Improved Scalability

Scaling up parallel processing power by simply adding more CPUs has practical limitations in terms of hardware costs, complexity, and efficiently utilizing all cores.

GPUs are designed specifically for massively parallel workloads and can scale to support thousands of computational cores on a single chip.

This scalability makes it straightforward to increase computing power for GPU-accelerated applications by adding more GPUs.

For parallel workloads, scaling with GPUs is often more cost-effective and efficient than expanding CPU-only compute clusters.

Who Can Benefit from GPU Acceleration?

Many industries and applications can benefit from the power of GPU acceleration:

- Gaming: GPUs help generate smooth frame rates and detailed graphics in video games. Modern game engines rely extensively on GPU acceleration.

- Scientific Computing: Simulations, molecular modeling, weather forecasting – GPUs significantly accelerate scientific workloads and high performance computing.

- AI/Machine Learning: Deep learning relies on GPU acceleration during the computationally intensive model training process. Self-driving cars, facial recognition, and other AI applications depend on GPUs.

- Finance: The parallel processing capacity of GPUs speeds up risk analysis, algorithmic trading platforms, and fraud detection models that rely on machine learning.

- Biotechnology: GPU accelerated computing helps rapidly analyze DNA sequencing data and aids biological research.

- Engineering: From computer aided engineering and analysis to computational fluid dynamics, GPU acceleration provides a boost across the engineering field.

- Creative Applications: GPU acceleration is crucial for video editing, 3D rendering, and design software that must smoothly handle high-resolution media files.

Essentially any industry that relies on simulations, visualized data, or machine learning can benefit from incorporating GPU technology. GPU computing has become indispensable across both consumer and enterprise applications.

How Does GPU Acceleration Work?

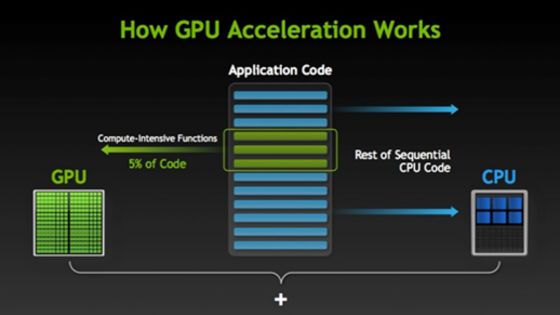

At a high level, GPU acceleration works by offloading the compute-intensive or data-parallel portions of an application to the GPU.

The GPU can process this workload in parallel across its thousands of cores. Here are the typical steps:

- Data is copied from CPU memory over to GPU memory.

- The application invokes parallel GPU functions called kernels.

- Many GPU threads run the kernels on different data elements concurrently.

- The GPU performs the required computations, accessing on-chip cache and memory as needed.

- Results are transferred back to CPU memory for any additional processing or storage.

This offloading of parallel segments lets the host CPU focus on general serial tasks. The workload is divided between CPU and GPU based on their respective strengths.
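The steps above can be sketched without GPU hardware. The following Python code emulates the execution model on the CPU for a SAXPY-style operation (`y = a * x + y`): it "copies" inputs into device buffers, launches a kernel over a grid of blocks and threads, and copies the result back. The names `saxpy_kernel` and `launch` are hypothetical, and the loops run sequentially, so this illustrates the programming model only. On a real GPU the threads would execute concurrently through a platform such as CUDA or OpenCL.

```python
def saxpy_kernel(block_idx, thread_idx, block_dim, a, x, y, n):
    """Work done by ONE emulated GPU thread: update a single element."""
    i = block_idx * block_dim + thread_idx  # global index of this thread
    if i < n:  # guard: the last block may have more threads than elements
        y[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Emulated kernel launch: visit every (block, thread) pair in turn.
    A real GPU would run these threads in parallel."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)


# "Copy" host data into device buffers (plain list copies in this emulation).
host_x = [1.0, 2.0, 3.0, 4.0, 5.0]
host_y = [10.0, 20.0, 30.0, 40.0, 50.0]
device_x, device_y = list(host_x), list(host_y)

# Choose a launch configuration and run the kernel on the device buffers.
n = len(device_x)
block_dim = 4                                # threads per block (assumed value)
grid_dim = (n + block_dim - 1) // block_dim  # enough blocks to cover n elements
launch(saxpy_kernel, grid_dim, block_dim, 2.0, device_x, device_y, n)

# "Copy" the result back to host memory.
result = list(device_y)
print(result)
```

Each emulated thread touches exactly one element, which is the property that lets a real GPU run many of them at once.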

Platforms and APIs such as CUDA, DirectCompute, and OpenCL handle the GPU interaction details so developers can focus on the computational code.

The result is GPU-accelerated applications that benefit from massive parallelism, especially for tasks with high arithmetic intensity.
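Arithmetic intensity is the ratio of floating-point operations to bytes moved to and from memory. As a rough sketch, the Python functions below estimate it for two common operations, assuming 4-byte floats and that each operand is read or written exactly once (real kernels with caching and tiling will differ). The function names are illustrative.

```python
BYTES_PER_FLOAT = 4  # assumption: single-precision values


def saxpy_intensity(n: int) -> float:
    """y = a*x + y on vectors of length n: 2 FLOPs per element,
    reading x and y and writing y (3 memory accesses per element)."""
    flops = 2 * n
    bytes_moved = 3 * n * BYTES_PER_FLOAT
    return flops / bytes_moved


def matmul_intensity(n: int) -> float:
    """C = A @ B for n x n matrices: about 2*n^3 FLOPs,
    reading A and B and writing C once each (3 * n^2 values)."""
    flops = 2 * n ** 3
    bytes_moved = 3 * n * n * BYTES_PER_FLOAT
    return flops / bytes_moved


print(f"SAXPY, n=1,000,000: {saxpy_intensity(1_000_000):.3f} FLOPs/byte")
print(f"Matmul, n=1,024:    {matmul_intensity(1024):.1f} FLOPs/byte")
```

Under these assumptions the vector update stays at about 0.17 FLOPs per byte regardless of size and tends to be limited by memory bandwidth, while the matrix multiplication figure grows with `n`, giving the GPU's arithmetic units more work per byte fetched.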

Examples of Tasks That Can be Accelerated With a GPU

- 3D Rendering: Shader programs harness GPU cores to rapidly render scenes with lighting and textures.

- Neural Network Inference: Massively parallel GPUs excel at evaluating already trained deep learning models for classification/prediction.

- Video Encoding/Decoding: GPUs speed up compression and decompression of high resolution videos by processing frames in parallel.

- Physical Simulations: Complex simulations of weather, fluid dynamics, and molecular dynamics are accelerated by GPUs.

- Cryptocurrency Mining: The immense parallel processing power of GPUs significantly speeds up cryptomining computations.

- Genomics: GPUs run genomics and DNA sequencing algorithms much faster than CPUs alone.

- Mathematical Modeling: Financial and quantitative models built on large matrices and floating-point operations can run much faster on GPUs.

Any application with elements that can be parallelized and scaled across thousands of threads is a strong candidate for large performance gains from GPU acceleration.
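Whether a particular task benefits also depends on the cost of moving its data to and from the GPU. The Python sketch below is a back-of-the-envelope estimate: it adds transfer time to the accelerated compute time and compares the total with the CPU-only time. The function name, the 16 GB/s link bandwidth, and the 50x kernel speedup are assumptions chosen for illustration.

```python
def offload_time(cpu_seconds, kernel_speedup, bytes_moved, link_bytes_per_second):
    """Estimated GPU-path time: data transfer time plus accelerated compute time."""
    transfer = bytes_moved / link_bytes_per_second
    compute = cpu_seconds / kernel_speedup
    return transfer + compute


LINK = 16e9  # assumed CPU-GPU link bandwidth in bytes per second

# Two hypothetical jobs that each move 2 GB in total but differ in compute cost.
small_job = offload_time(cpu_seconds=0.1, kernel_speedup=50,
                         bytes_moved=2e9, link_bytes_per_second=LINK)
large_job = offload_time(cpu_seconds=60.0, kernel_speedup=50,
                         bytes_moved=2e9, link_bytes_per_second=LINK)

print(f"small job: CPU 0.100 s vs GPU path {small_job:.3f} s")
print(f"large job: CPU 60.000 s vs GPU path {large_job:.3f} s")
```

In this example the small job is slower on the GPU path because the transfer alone takes longer than the original computation, while the large job drops from 60 seconds to under 2.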

How to Get Started with GPU Acceleration

Here are some tips on how to get started with GPU acceleration for your applications:

- Determine parallelizable segments: Profile code to identify hotspots suitable for parallelization on GPUs. Focus on repetitive computations operating on different data elements that can run concurrently.

- Select a platform: Popular options include CUDA, OpenCL, DirectCompute, etc. Evaluate ease of integration, hardware support, and language preferences.

- Target algorithms wisely: Algorithms may need to be restructured, for example by reducing branching and data dependencies, to map efficiently onto the GPU architecture.

- Allocate GPU memory: Copy relevant data over from CPU memory into GPU memory for fastest access during computation.

- Develop and optimize kernels: These are the key parallel functions that will be executed on the GPU.

- Refine based on profiling: Use profilers to identify and overcome bottlenecks related to GPU utilization, memory transfers, kernel tuning, etc.

With the right algorithms and a bit of profiling and optimization, applications from diverse domains can benefit from order-of-magnitude speedups via GPU acceleration.
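For the profiling step, Python's built-in `cProfile` and `pstats` modules are one way to find hotspots before porting anything. The sketch below profiles a toy workload; `load_points` and `pairwise_distances` are hypothetical stand-ins for application code.

```python
import cProfile
import io
import pstats


def load_points(count):
    """Cheap setup step (hypothetical)."""
    return [(float(i), float(i % 7)) for i in range(count)]


def pairwise_distances(points):
    """O(n^2) loop applying the same arithmetic to independent pairs:
    the kind of hotspot that may map well onto a GPU."""
    total = 0.0
    for ax, ay in points:
        for bx, by in points:
            total += ((ax - bx) ** 2 + (ay - by) ** 2) ** 0.5
    return total


def main():
    points = load_points(300)
    return pairwise_distances(points)


profiler = cProfile.Profile()
profiler.enable()
main()
profiler.disable()

# Sort by cumulative time and show the five most expensive entries.
report = io.StringIO()
pstats.Stats(profiler, stream=report).sort_stats("cumulative").print_stats(5)
print(report.getvalue())
```

A function that dominates the cumulative time and repeats the same computation over independent data elements is a natural first candidate for a GPU kernel.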

Examples of GPU Acceleration in Use

Let’s look at some real world examples of how GPU acceleration is delivering tangible benefits:

Healthcare

NVIDIA’s Clara Parabricks utilizes GPU acceleration to analyze whole human genomes up to 80x faster compared to CPU-only methods. This enables hospitals to provide genetic analysis results in just hours instead of weeks.

Manufacturing

BMW uses NVIDIA DGX systems for GPU-accelerated analysis of vibration measurements from vehicle gear testing to significantly reduce physical prototyping time.

Cloud Computing

AWS, Google Cloud, and Azure provide GPU-powered cloud instances to accelerate machine learning, graphics, and HPC workloads for cloud users. Top cloud providers rely extensively on GPUs.

Finance

JPMorgan Chase uses GPU clusters to accelerate Monte Carlo risk simulations. This allows analyzing portfolio risks 4000x faster than previous systems.

Meteorology

Japan’s weather bureau runs GPU-accelerated supercomputer simulations to provide highly granular and localized weather forecasts and modeling.

Research

Oak Ridge National Lab’s Summit supercomputer combines more than 27,000 NVIDIA GPUs with CPUs to reach a peak of 200 petaflops of computing power for diverse research applications.

Here is a summary of Summit’s key specifications:

- Peak performance: 200 petaflops

- CPUs: 9,216 IBM Power9 CPUs

- GPUs: 27,648 NVIDIA Volta GPUs

- Memory: 10 petabytes

- Storage: 250 petabytes
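The figures in this list imply a couple of derived ratios, worked out in the short Python sketch below. It uses only the numbers quoted above; the per-GPU figure is an upper bound that attributes the entire peak to the GPUs and ignores the CPUs' contribution.

```python
# Figures quoted in the specification list.
peak_petaflops = 200
cpu_count = 9_216
gpu_count = 27_648

gpus_per_cpu = gpu_count / cpu_count

# Upper bound: treats the whole peak as coming from the GPUs alone.
teraflops_per_gpu = peak_petaflops * 1_000 / gpu_count

print(f"GPUs per CPU: {gpus_per_cpu:.0f}")
print(f"Peak per GPU (upper bound): {teraflops_per_gpu:.2f} teraflops")
```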

The performance benefits of GPU acceleration are being leveraged across consumer and enterprise applications in every industry.

Troubleshooting GPU Acceleration Problems

Here are some tips for troubleshooting common problems with GPU accelerated applications:

- Verify hardware compatibility: Ensure that the GPU, drivers, OS, and platform versions (CUDA, etc.) are compatible with one another.

- Check error logs: Logs and error codes from APIs like CUDA and OpenCL can indicate setup or coding issues.

- Profile utilization: Low GPU utilization indicates optimization opportunities in parallelization or memory transfers.

- Tune kernels: Adjust kernel configurations and block/grid sizes to maximize parallelism and hardware concurrency.

- Optimize memory: Minimize data transfers between CPU and GPU. Leverage caches effectively and optimize memory access patterns.

- Simplify algorithms: Reduce complexity to improve parallel mappings. Use profiling insights to identify bottlenecks.

- Upgrade software: Keeping drivers, frameworks, and languages (Python, etc.) up to date can resolve compatibility issues.

- Compare hardware: Test across GPUs from different generations to compare performance and diagnose architectural limitations.

With careful profiling, optimization, and testing, the majority of GPU acceleration issues can be diagnosed and resolved to unlock substantial speedups.
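As one concrete piece of kernel tuning, the grid size for a one-dimensional launch is usually derived from the element count and the chosen block size by ceiling division. The Python sketch below computes that and reports how many threads in the last block fall past the end of the data. The function name `launch_config` and the candidate block sizes are assumptions; the best block size depends on the hardware and the kernel and has to be measured.

```python
def launch_config(n_elements: int, block_size: int):
    """Return (grid_size, idle_threads) for a 1D launch covering n_elements."""
    if n_elements <= 0 or block_size <= 0:
        raise ValueError("n_elements and block_size must be positive")
    grid_size = (n_elements + block_size - 1) // block_size  # ceiling division
    idle_threads = grid_size * block_size - n_elements       # threads past the end
    return grid_size, idle_threads


# Compare a few candidate block sizes for a hypothetical 1,000,000-element array.
for block_size in (64, 128, 256, 1024):
    grid_size, idle = launch_config(1_000_000, block_size)
    print(f"block={block_size:>4}  grid={grid_size:>6}  idle threads={idle}")
```

The idle threads are the reason GPU kernels typically include a bounds check before touching memory.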

Conclusion

GPU acceleration leverages the massive parallel processing power of graphics processing units to accelerate diverse computing workloads. GPUs are specialized circuits designed for high-throughput floating-point and matrix math.

By offloading parallel segments of code to the GPU, applications can see order-of-magnitude speedups over CPU-only implementations. The benefits include improved performance, power efficiency, and scalability.

Industries from gaming and graphics to deep learning, cryptography, simulations, finance, and more are powered by GPU acceleration today.

As applications become more complex, GPU acceleration will become indispensable for achieving real-time performance and efficiency.

With the right algorithms and optimizations, GPUs will remain a driving force in pushing application capabilities to new levels across consumer and enterprise computing.

FAQs

Should I turn off GPU acceleration?

In most cases, no. GPU acceleration uses your graphics card to process and render visual effects more efficiently. Turning it off forces the CPU to handle all graphics tasks, which usually results in slower performance. Consider disabling it only if it causes crashes or visual glitches in a particular application.

What is the difference between a graphics accelerator and a GPU?

A graphics accelerator is a broader term that refers to any hardware component that accelerates graphics operations. A GPU (graphics processing unit) is a specific type of graphics accelerator: a dedicated chip designed to handle graphics workloads.

Does hardware acceleration reduce quality?

No, hardware acceleration does not reduce visual quality. It offloads graphics processing to the GPU, freeing up the CPU and system resources to maintain quality. Well-implemented hardware acceleration can even improve quality.

What is the best GPU for GPU acceleration?

There is no single best choice; it depends on the workload. NVIDIA and AMD GPUs designed for gaming, machine learning, and content creation perform well on GPU-accelerated tasks like video editing, 3D modeling, and game development. High-end GPUs have more cores and memory to handle parallel workloads.

What is RTX acceleration?

NVIDIA RTX acceleration refers specifically to hardware acceleration features on NVIDIA’s RTX series GPUs. This includes accelerated ray tracing, AI processing, programmable shading and other capabilities to speed up advanced graphics and effects.

Does hardware acceleration slow down a PC?

No, proper hardware acceleration does the opposite – it speeds up your PC by offloading intensive graphics tasks to your GPU instead of your CPU. The GPU is designed to handle these parallel workloads much more efficiently.

Does hardware acceleration use more RAM?

Hardware acceleration requires some additional VRAM on your GPU. But it reduces the load on your overall system RAM by shifting graphics work to dedicated GPU memory. The performance benefits outweigh the small VRAM requirement.